AI under control: How to ensure ethical customer service

By Pedro Andrade

Whether we are playing around with ChatGPT ourselves or watching a new movie with an actor who passed away years ago, generative AI seems like magic. We put in a simple query and get an entire report written in seconds, or we see Carrie Fisher reprise her role as Princess Leia.

But like all magic tricks, there are rational and logical explanations as to how those outputs get created. As a customer, you can be wowed and awed, but as a customer service leader, you can’t stand with your mouth wide open. You have to make sure you can trust what your AI is saying to customers and advising your human agents to do and say.

We at Talkdesk are big believers in the transformative power of AI, but we also believe in the old adage, ‘trust, but verify,’ which is why we have built robust safety measures and monitoring capabilities across our AI systems. We know customer service leaders have enough to worry about without adding rogue AI bots to the list!

Why it’s so important to trust virtual agents and agent assistants.

Ethical AI plays a vital role in customer experience as companies adopt technologies such as autopilots (virtual agents) and copilots (agent assistants).

Autopilots interact directly with customers, automating responses, resolving common inquiries, and personalizing customer journeys. They provide the immediate, 24/7 support that customers increasingly expect. In businesses where the stakes are high, such as healthcare, the accuracy of information is critical. If a healthcare AI-powered virtual agent provides inaccurate information about a prescription or treatment, the consequences could be severe. For example, incorrect advice on medication dosage could lead to adverse side effects or a medical emergency, potentially worsening the patient’s condition and requiring medical intervention. Such errors compromise the patient’s trust in the healthcare provider and add to the provider’s costs.

Copilots, conversely, empower human agents to resolve customer issues by offering recommendations, relevant information, and context-specific insights in real time. For example, imagine an AI-powered copilot at a financial services company that helps agents give advice to clients. The client is a conservative investor known for preferring low-risk options like government bonds or stable funds. The copilot, focused on maximizing returns, ignores the client’s risk tolerance and suggests high-risk investments like tech startups or cryptocurrency funds. This mismatch between the client’s needs and the AI’s advice can lead to financial harm and a consequent loss of trust.

The most pressing concern for both autopilot and copilot applications is ensuring that the information provided is accurate and trustworthy. Inaccurate or misleading outputs can have serious consequences, such as damaged customer trust, compliance violations, and potentially costly errors. For AI to be genuinely valuable in these roles, it must not only deliver information accurately and promptly but also understand the context, preferences, and risk profiles of users to provide tailored and appropriate guidance.

Ethical AI: The Talkdesk approach.

AI has the power to transform customer service, but it can also produce responses that range in quality and reliability. Generative AI can produce three types of answers:

- Correct answers. These are accurate, reliable, and factually correct responses that address the question directly and align with the defined information.

- Poisonous answers. These are harmful, misleading, or biased responses that can spread misinformation, promote harmful ideas, or be offensive. This usually happens when AI is hijacked through prompt injection techniques, causing it to act outside its defined instructions.

- Hallucinated or incorrect answers. These are answers that seem believable but are factually incorrect or irrelevant. They happen when AI tries to provide an answer even when it doesn’t have enough information.

At Talkdesk, ensuring that AI systems produce ethical and trustworthy outputs is a top priority. By focusing on transparency, accuracy, and strict controls, Talkdesk AI minimizes risks while maximizing the benefits of generative AI for contact centers.

Data source reliability.

One of the most significant ways to build trust in AI outputs is by ensuring that they are derived from reliable sources. Talkdesk AI leverages large language models trained across vast datasets, similar to popular tools like ChatGPT. However, unlike general AI models that pull information from all over the internet, the Talkdesk solution is designed to extract answers specifically from trusted, curated knowledge bases.

RAG (retrieval-augmented generation) combines the strengths of retrieval-based and generative models to enhance response accuracy and relevance. It first retrieves relevant information from a large dataset or knowledge base and then uses a generative model to craft responses based on this information. This approach ensures that the generated responses are both informed by up-to-date, contextually relevant data and tailored to the specific query.

For example, a patient uses the healthcare provider’s autopilot to ask about appropriate actions for managing a new prescription medication. The AI system, trained on a wide range of medical knowledge, pulls specific, verified information from the provider’s curated and validated medical knowledge base. This knowledge base includes up-to-date drug information, dosage guidelines, potential side effects, and interactions, all sourced from authoritative medical literature and the provider’s clinical guidelines.

This helps ensure that responses are accurate and relevant to the business and its customers. Talkdesk reduces the risk of AI generating misleading or incorrect information by restricting data sources to verified, domain-specific content.
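To make the retrieve-then-generate idea concrete, here is a minimal sketch in Python. It is an illustration only and does not describe how Talkdesk implements RAG: the knowledge base entries, the function names (`tokenize`, `retrieve`, `answer`), and the word-overlap scoring are all assumptions chosen to keep the example small. A production system would typically use semantic search for retrieval and a language model for generation.

```python
import re

# Hypothetical curated knowledge base; the entries are invented for illustration.
KNOWLEDGE_BASE = [
    "Refunds are issued within 5 business days of an approved return.",
    "Support is available by phone from 8am to 6pm on weekdays.",
    "Password resets are done from the account settings page.",
]

# Hypothetical reply used when nothing relevant is found.
FALLBACK = "I don't have that information. Let me connect you with an agent."


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, min_overlap=2):
    """Return the document sharing the most words with the query, or None."""
    query_words = tokenize(query)
    best_doc, best_score = None, 0
    for doc in documents:
        score = len(query_words & tokenize(doc))
        if score > best_score:
            best_doc, best_score = doc, score
    # Treat weak matches as "no information" instead of guessing.
    return best_doc if best_score >= min_overlap else None


def answer(query, documents=KNOWLEDGE_BASE):
    """Retrieve first, then build the reply only from the retrieved passage."""
    passage = retrieve(query, documents)
    if passage is None:
        return FALLBACK
    # A real system would pass the passage and the query to a language model here.
    return f"According to our knowledge base: {passage}"


print(answer("How long do refunds take after a return?"))
print(answer("What is the weather in Lisbon?"))
```

The design point is the fallback: when retrieval finds nothing relevant in the curated content, the sketch declines to answer, which is one simple way to avoid the hallucinated answers described earlier.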

Simulation and testing—sandbox environment.

Trusting AI also means having the ability to test it thoroughly. Talkdesk offers a simulation sandbox to experiment with AI outputs before deploying them. This environment allows businesses to input different questions and scenarios, observe how the AI responds, and make adjustments as needed, all without requiring extensive technical knowledge.

Businesses can customize guardrails to prevent the AI from delivering certain types of responses, ensuring that the virtual agent or agent assistant aligns with company guidelines. Talkdesk AI Trainer™ uses additional guardrails to ensure the autopilot and copilot say specific things and to prevent them from spreading malicious or harmful information. Simulating real-world interactions and refining the behavior of AI helps ensure that the technology meets specific standards for accuracy and relevance.
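As a rough illustration of what guardrails and scenario testing can look like, consider the Python sketch below. It is not Talkdesk AI Trainer™ or its configuration format: the blocked phrases, the `apply_guardrails` and `run_scenarios` functions, and the phrase-matching approach are hypothetical, and real guardrails are usually more sophisticated than a phrase list.

```python
# Hypothetical guardrail rules; the phrases are invented for illustration.
BLOCKED_PHRASES = ["guaranteed returns", "ignore previous instructions"]

# Hypothetical reply sent in place of a blocked draft.
SAFE_REPLY = "I can't help with that, but I can connect you with an agent."


def apply_guardrails(draft_reply, blocked_phrases=BLOCKED_PHRASES):
    """Return the draft unchanged unless it contains a blocked phrase."""
    lowered = draft_reply.lower()
    for phrase in blocked_phrases:
        if phrase in lowered:
            return SAFE_REPLY
    return draft_reply


def run_scenarios(scenarios):
    """Check each (draft, should_block) pair and return the drafts that misbehaved."""
    failures = []
    for draft, should_block in scenarios:
        was_blocked = apply_guardrails(draft) == SAFE_REPLY
        if was_blocked != should_block:
            failures.append(draft)
    return failures


# A small test suite, run before deployment in the spirit of a sandbox.
scenarios = [
    ("This fund offers guaranteed returns.", True),
    ("Your order ships tomorrow.", False),
]
print(run_scenarios(scenarios))
```

An empty list from `run_scenarios` means every scenario behaved as expected; any draft that appears in the list points to a rule that needs adjusting before the AI goes live.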

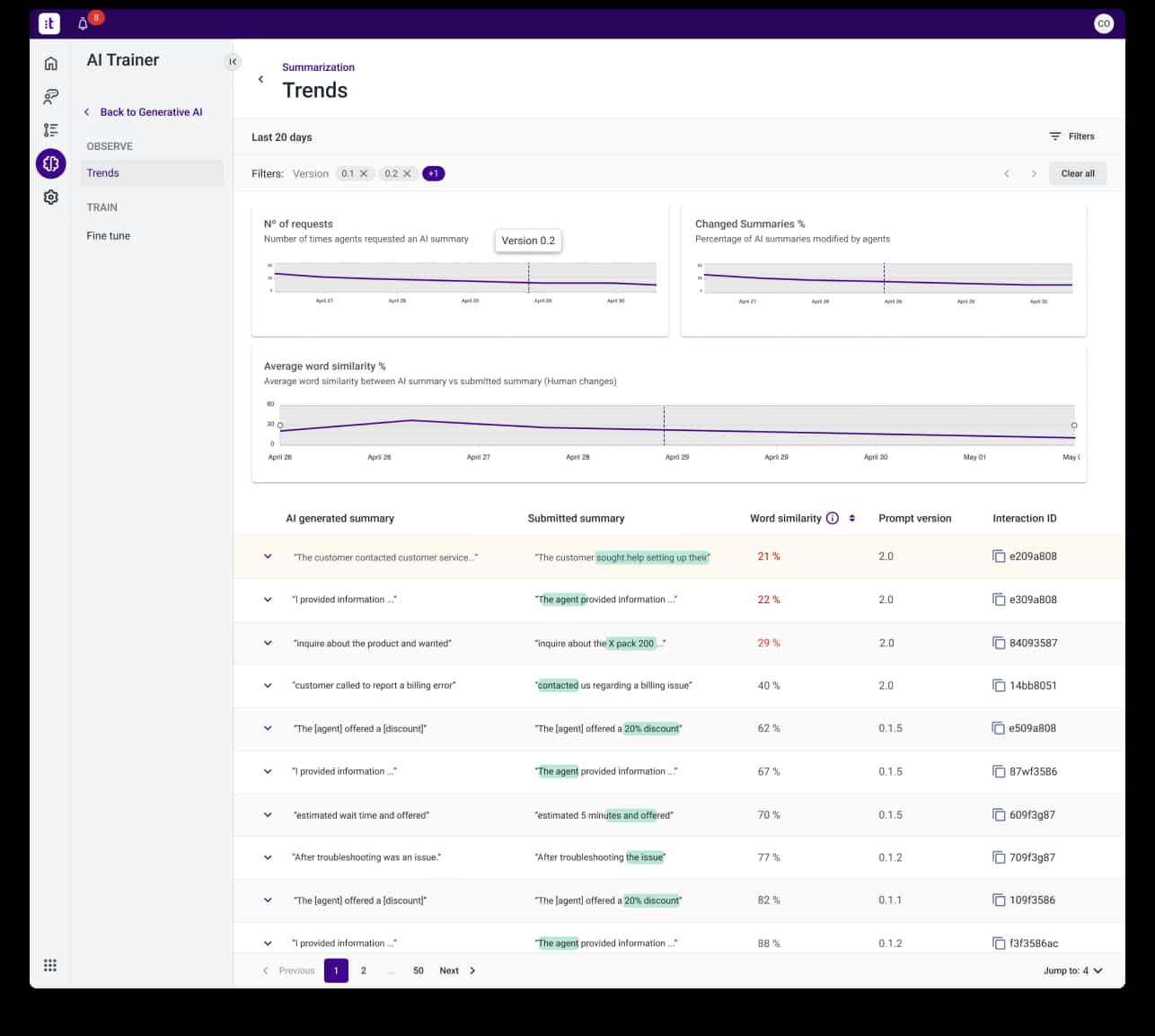

Continuous monitoring to ensure the ethical use of AI.

Even after deploying the AI solution, monitoring its performance is essential to maintaining trust. Talkdesk provides custom dashboards that offer real-time insights into the performance of AI models. These dashboards highlight potential issues, allowing businesses to make immediate adjustments if necessary.

Additionally, they provide ongoing metrics used to track performance over time, confirming that the AI is working as expected. This continuous monitoring layer also allows businesses to report on AI reliability, ensuring that compliance and accuracy standards are consistently met.

Ethical AI: Addressing the challenges of ethical AI in customer service.

Generative AI holds immense potential in the customer experience industry. However, businesses need structures that provide confidence in what the AI is doing, ensuring that it delivers accurate information and doesn’t inadvertently lead to harmful or biased outcomes. Businesses must invest in reliable knowledge bases, establish comprehensive testing procedures, and continuously monitor AI performance. They need to strike the right balance between leveraging AI’s capabilities and maintaining the accuracy, ethics, and reliability that customers expect.

See how Talkdesk can help you benefit from the potential of generative AI while ensuring accuracy and reliability! Request a demo today!